Is there anything to be done when we get that nagging suspicion that some unobserved factor is driving our results? Should we just send the paper off to a journal and hope for the best...or can we learn something about omitted variable bias using the variables we do have in the data?

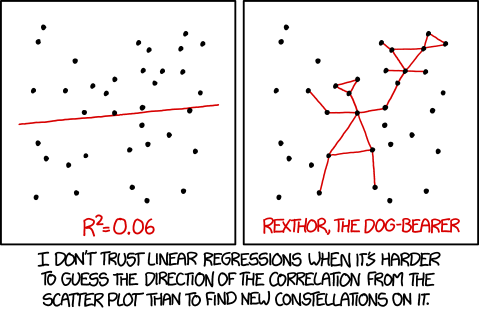

One commonly used trick is simply to check whether estimates of our coefficient of interest change very much as more and more control variables are added to the regression model. To take a classic example, if we want to estimate the impact of schooling on earnings, we may be concerned that higher-ability people get more formal education but would earn higher wages regardless of schooling. To address this issue, researchers may control for parental education, AFQT score, the number of books in the childhood home, and the list goes on and on. If the estimated impact of schooling doesn't change very much as more of these variables are added, we might feel reasonably confident that the estimated treatment effect is not severely biased. Is it possible to control for everything? No. Nevertheless, if our main results don't change very much when we control for more and more things, then our identification strategy, whatever it might be, is probably pretty good.
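To make the "add controls and watch the coefficient" check concrete, here is a minimal Python sketch on simulated data. Everything in it is hypothetical: the data-generating process, the variable names (`ability`, `parent_educ`, `test_score`), and the assumed true return to schooling of 0.08 were chosen purely for illustration, and plain NumPy least squares stands in for a regression package.

```python
import numpy as np

def ols(y, X):
    """OLS via least squares; returns (coefficients, R-squared). X must include an intercept column."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    return beta, r2

# Hypothetical data-generating process: ability raises both schooling and wages.
rng = np.random.default_rng(0)
n = 20_000
true_effect = 0.08                                   # assumed return to a year of schooling
ability = rng.normal(size=n)                         # the unobserved confounder
parent_educ = ability + rng.normal(size=n)           # noisy proxy for ability
test_score = ability + rng.normal(size=n)            # another noisy proxy (think AFQT)
schooling = 12 + 1.5 * ability + rng.normal(size=n)
log_wage = 1.0 + true_effect * schooling + 0.2 * ability + rng.normal(scale=0.5, size=n)

specs = [
    ("no controls", []),
    ("+ parental education", [parent_educ]),
    ("+ parental educ. and test score", [parent_educ, test_score]),
]

results = []
for label, controls in specs:
    # Columns: intercept, schooling, then whichever controls this spec includes.
    X = np.column_stack([np.ones(n), schooling, *controls])
    beta, r2 = ols(log_wage, X)
    results.append((label, beta[1], r2))            # beta[1] is the schooling coefficient
    print(f"{label:32s} schooling coef = {beta[1]:.4f}   R^2 = {r2:.3f}")
```

In this setup the proxies measure ability only with noise, so the schooling coefficient keeps falling as controls are added while staying above the assumed true effect. That is exactly the situation in which "doesn't change very much" is hard to judge by eye.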

Sounds nice, right? I do this kind of thing all the time. But what does it mean to "not change very much"? And shouldn't some controls count for more than others? Emily Oster has a forthcoming paper in the Journal of Business & Economic Statistics that formally connects coefficient stability to omitted variable bias. The key insight is that coefficient movements have to be read alongside R-squared movements: if adding controls barely raises the R-squared, a stable coefficient says little about how much the unobservables could matter. She even provides Stata code on her webpage for performing the necessary calculations. I have no idea if her technique will catch on, but even if you never use her code, I recommend reading the paper just for its very clear discussion of omitted variable bias.
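The arithmetic behind that insight can be sketched with the approximate bias-adjusted estimator from Oster's paper, β* ≈ β̃ − δ(β̊ − β̃)(R_max − R̃)/(R̃ − R̊). Here β̊ and R̊ come from the regression without controls, β̃ and R̃ from the regression with controls, δ is the assumed strength of selection on unobservables relative to observables, and R_max is the R-squared we would get if the unobservables could be included too. The Python below is a minimal sketch of that approximation only, not a port of her Stata code, which handles the more general case. The function names, the input numbers, and the choice R_max = 1.3 × R̃ (a benchmark the paper suggests) are illustrative assumptions.

```python
def oster_beta_star(beta_short, r2_short, beta_long, r2_long, r_max, delta=1.0):
    """Approximate bias-adjusted coefficient (hypothetical helper, approximation only).

    beta_short, r2_short: treatment coefficient and R^2 with no controls.
    beta_long,  r2_long:  the same with observed controls included.
    r_max: assumed R^2 if the unobservables could also be controlled for.
    delta: assumed selection on unobservables relative to observables.
    """
    if r2_long <= r2_short:
        raise ValueError("controls must raise the R-squared")
    return beta_long - delta * (beta_short - beta_long) * (r_max - r2_long) / (r2_long - r2_short)


def delta_for_zero(beta_short, r2_short, beta_long, r2_long, r_max):
    """delta at which the approximate bias-adjusted coefficient equals zero.

    Assumes the coefficient actually moved (beta_short != beta_long).
    """
    return beta_long * (r2_long - r2_short) / ((beta_short - beta_long) * (r_max - r2_long))


# Two hypothetical studies with identical coefficient movement (0.10 -> 0.08),
# but controls add 10 points of R^2 in the first and only 2 points in the second.
for r2_long in (0.30, 0.22):
    r_max = 1.3 * r2_long  # rule-of-thumb R_max, an assumption here
    b_star = oster_beta_star(0.10, 0.20, 0.08, r2_long, r_max)
    d0 = delta_for_zero(0.10, 0.20, 0.08, r2_long, r_max)
    print(f"R2 0.20 -> {r2_long:.2f}: beta* = {b_star:.3f}, delta for beta*=0: {d0:.2f}")
```

With these made-up numbers, the first study's bias-adjusted coefficient is about 0.062 and unobservables would need to be roughly 4.4 times as important as the observed controls to push it to zero; in the second study the adjusted coefficient drops to about 0.014 and a δ of about 1.2 suffices. Same coefficient movement, very different conclusions.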

Happy Holidays!